In this paper, we present an efficient and robust deep learning solution for novel view synthesis of complex scenes. In our approach, a 3D scene is represented as a light field, i.e., a set of rays, each of which has a corresponding color when it reaches the image plane. For efficient novel view rendering, we adopt a two-plane parameterization of the light field, in which each ray is characterized by a 4D coordinate. We then formulate the light field as a 4D function that maps these 4D coordinates to the corresponding color values. We train a deep fully connected network to learn this implicit function, thereby memorizing the 3D scene. The scene-specific model is then used to synthesize novel views. Unlike previous light field approaches, which require dense view sampling to render novel views reliably, our method renders novel views by sampling rays and querying the color of each ray directly from the network, which enables high-quality light field rendering from a sparser set of training images. The network can optionally predict per-ray depth, enabling applications such as auto refocus. Our novel view synthesis results are comparable to the state of the art, and even superior in some challenging scenes with refraction and reflection. We achieve this while maintaining an interactive frame rate and a small memory footprint.
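The two-plane parameterization mentioned above can be illustrated with a short sketch: a ray's 4D coordinate is the pair of points where it crosses two parallel planes. This is a minimal example assuming the planes are placed at z = z_uv and z = z_st; the function name `two_plane_coords` and the default plane positions are hypothetical illustrations, not values taken from NeLF.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def two_plane_coords(origin: Vec3, direction: Vec3,
                     z_uv: float = 0.0, z_st: float = 1.0) -> Tuple[float, float, float, float]:
    """Map a ray to a 4D coordinate (u, v, s, t).

    (u, v) is where the ray crosses the plane z = z_uv and (s, t) is where it
    crosses the plane z = z_st. The plane positions are hypothetical defaults.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dz) < 1e-12:
        # A ray parallel to both planes never intersects them.
        raise ValueError("ray is parallel to the parameterization planes")
    # Ray parameters at which the ray reaches each plane.
    t1 = (z_uv - oz) / dz
    t2 = (z_st - oz) / dz
    u, v = ox + t1 * dx, oy + t1 * dy
    s, t = ox + t2 * dx, oy + t2 * dy
    return u, v, s, t
```

For example, a ray starting at (0, 0, -1) with direction (1, 2, 1) crosses z = 0 at (1, 2) and z = 1 at (2, 4), so its 4D coordinate is (1, 2, 2, 4). Because the coordinate depends only on the ray's line, not on where its origin lies along it, every pixel of any camera maps to a single point in this 4D space that the network can be queried with.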

Demo

Real-Time Demo

Overview

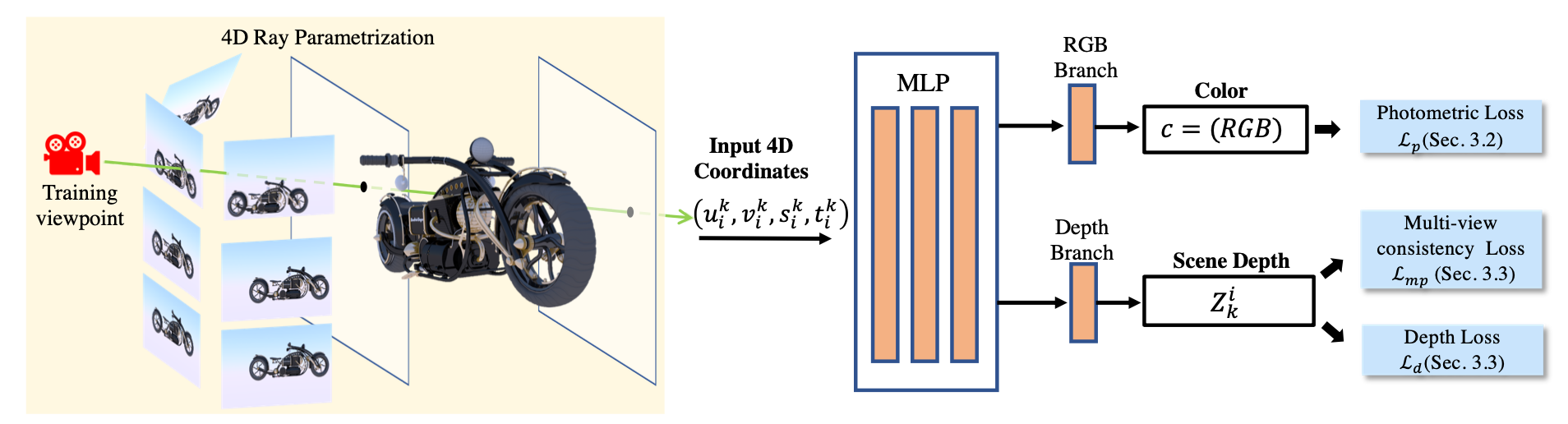

For rays sampled from the training images, both the 4D coordinates and the corresponding color values are known. The input to NeLF is the 4D coordinate of a ray (the query), and the output is its RGB color and scene depth. By minimizing the difference between the predicted and ground-truth colors, NeLF faithfully learns the mapping between the 4D coordinate that characterizes a ray and its color. We also build a depth branch that lets the network learn per-ray scene depth through self-supervised losses.
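The query described above (4D ray coordinate in, color and depth out) can be sketched as a fully connected network with a shared trunk and two output heads, plus a color-reconstruction loss. This is a minimal NumPy illustration, not the NeLF architecture: the layer count, width, He initialization, sigmoid color activation, softplus depth activation, and mean-squared-error loss are all assumptions, and the names `init_nelf`, `nelf_forward`, and `photometric_loss` are hypothetical. The self-supervised depth losses are not reproduced here.

```python
import numpy as np


def init_nelf(hidden=256, num_layers=8, seed=0):
    """Hypothetical sizes: a 4D input, `num_layers` ReLU layers of width `hidden`."""
    rng = np.random.default_rng(seed)

    def layer(fan_in, fan_out):
        # He initialization, a common choice for ReLU layers (an assumption here).
        w = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        return w, np.zeros(fan_out)

    trunk = [layer(4 if i == 0 else hidden, hidden) for i in range(num_layers)]
    # Separate heads for color (3 values) and depth (1 value) share the trunk.
    return {"trunk": trunk, "rgb": layer(hidden, 3), "depth": layer(hidden, 1)}


def nelf_forward(params, rays_4d):
    """Map an (N, 4) array of (u, v, s, t) coordinates to (N, 3) colors and (N,) depths."""
    h = rays_4d
    for w, b in params["trunk"]:
        h = np.maximum(h @ w + b, 0.0)  # ReLU
    w_c, b_c = params["rgb"]
    rgb = 1.0 / (1.0 + np.exp(-(h @ w_c + b_c)))  # sigmoid keeps colors in (0, 1)
    w_d, b_d = params["depth"]
    depth = np.logaddexp(0.0, (h @ w_d + b_d)[:, 0])  # softplus keeps depth non-negative
    return rgb, depth


def photometric_loss(pred_rgb, gt_rgb):
    """Mean squared error between predicted and ground-truth colors (assumed loss)."""
    return float(np.mean((pred_rgb - gt_rgb) ** 2))
```

In a real training setup, this loss would be minimized over random batches of training rays with a gradient-based optimizer in an automatic differentiation framework; the sketch only shows the forward pass and the quantity being minimized. Since each pixel costs a single network query, rendering avoids the many per-ray samples that volume-rendering approaches need.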

Results Video

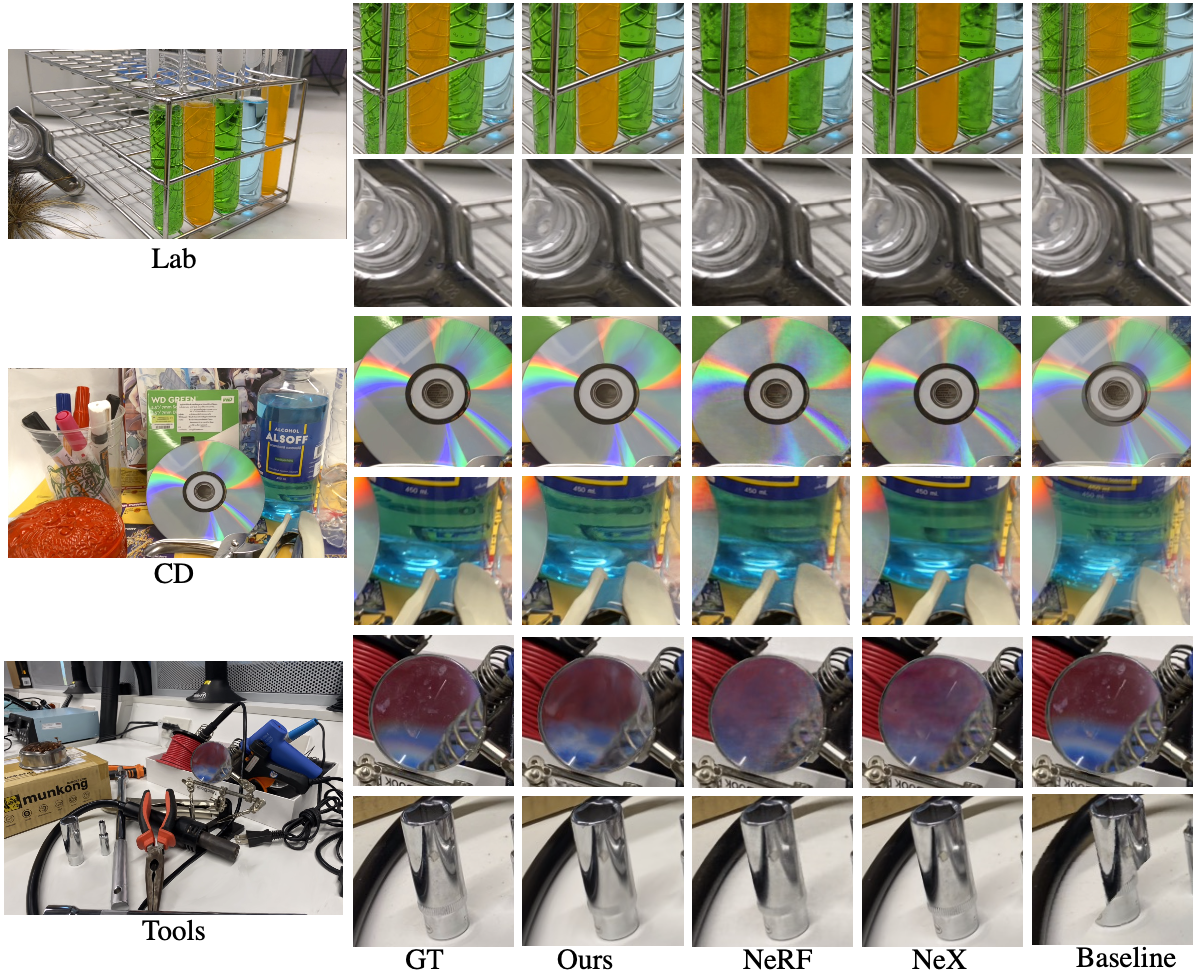

Qualitative Results

Qualitative results on test views from the Shiny dataset. Our method captures more detail in the reflective and refractive regions of the scenes.

Related Works

- Light Field Networks: Neural Scene Representations with Single-Evaluation Rendering. NeurIPS 2021.
  Vincent Sitzmann, Semon Rezchikov, William T. Freeman, Joshua B. Tenenbaum, Frédo Durand

- Light Field Neural Rendering. CVPR 2022.
  Mohammed Suhail, Carlos Esteves, Leonid Sigal, Ameesh Makadia

- Learning Neural Light Fields with Ray-Space Embedding Networks. CVPR 2022.
  Benjamin Attal, Jia-Bin Huang, Michael Zollhöfer, Johannes Kopf, Changil Kim